Scientists predict human-level artificial intelligence by 2040.

Maybe sooner if the bar keeps dropping.

The Problem of Dirty Data and Its Impact on Businesses

Dirty data is a common issue faced by businesses of all sizes. It refers to data that is poorly formatted, contains errors or inconsistencies, or is otherwise unreliable. When such data is ingested into a system without proper cleaning and standardization, it can lead to a range of problems, including:

- Incorrect calculations and flawed decision-making

- Damage to reputation and loss of customers and revenue

- Mission-critical systems failing to function properly

- Data breaches and other security issues

- Legal and regulatory issues, fines, and penalties

- Loss of productivity and efficiency

As you can see, dirty data can be costly and risky for businesses. It's important for organizations to take steps to address the problem and ensure that their data is of high quality.

Avoiding Dirty Data and Its Consequences

There are several approaches that businesses can take to avoid dirty data and its consequences. One key step is to establish data formatting standards that provide guidelines for how data should be formatted, including rules for formatting names, addresses, and other information.

It's also important to clean and correct existing data to conform to these standards. This process can be time-consuming and labor-intensive, often requiring manual work and expertise. New data sources should also follow the established standards, which may require cooperation with data providers and efforts to validate and ensure the quality of the data.

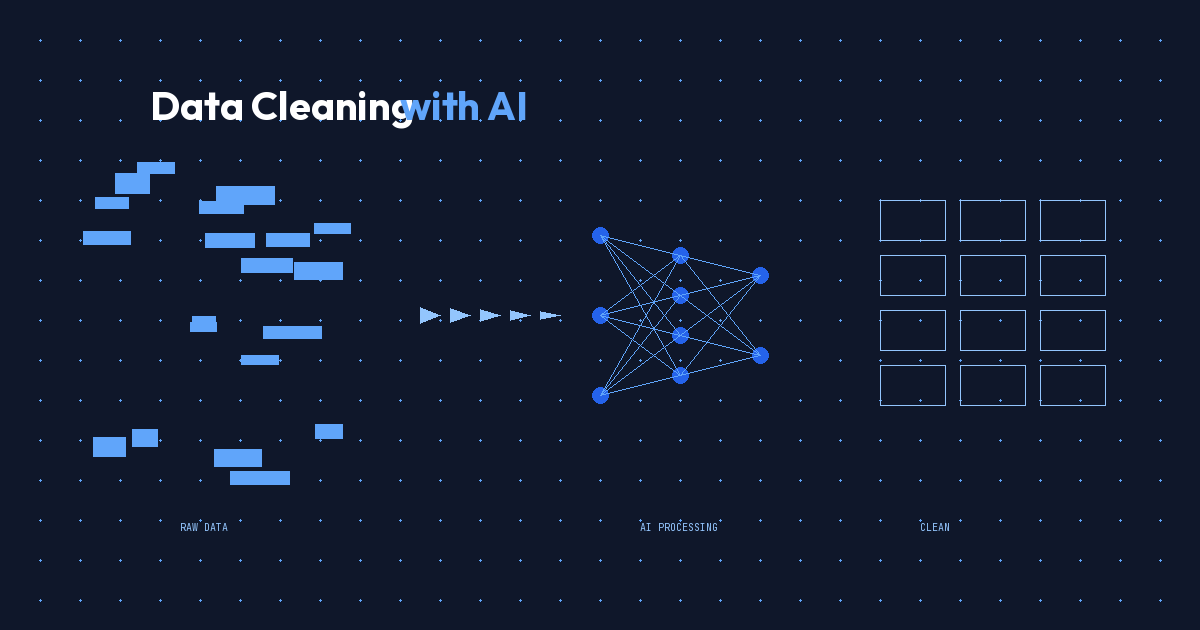

🤖 Using AI to Address Dirty Data

Artificial intelligence (AI) can be a cost-effective and reliable solution for tasks that previously required manual work, such as integrating new data sources or cleaning existing data. AI can automate processes such as data format validation, correction, and gap filling, which can be time-consuming and prone to errors when done manually. In fact, AI can often perform these tasks with higher accuracy and speed than humans.

One of the key benefits of AI is its ability to learn the structure of data from a sample and transform it into the desired format, filling gaps in a smart way. In this article, we will demonstrate how GPT-3, a natural language processing tool, can be used to clean and standardize data. While the code snippets provided are written in Python, the same approach can be applied using other programming languages.

🪄 GPT-3 Magically Fixes Dirty Data

As an example, consider a scenario where we have received a file which is implied to contain some information, but there is no format or schema defined.

name, surname, gender,e-mail,dob

Arseniy potapov, , M, "[email protected]", 1988-01-01

John,Doe ,, [email protected], 01/01/1979

Jane,Doe,,[email protected], "Jan 1, 1969"

John ,Smith , , John Smith <[email protected]>;, "1959 01 01"

,Mary Smith,F,[email protected],

,,M,unknow n email,The system expects certain fields:

- first name

- last name

- email address

- gender (M/F)

- date of birth (YYYY-MM-DD)

At first glance, it might seem that this data is irreparable. However, by using GPT-3, we can fix the data. The following code demonstrates how to trigger the GPT-3 API endpoint and provide it with a prompt asking it to convert and clean the data:

dirty_data = """

name, surname, gender,e-mail,dob

Arseniy potapov, , M, "[email protected]", 1988-01-01

John,Doe ,, [email protected], 01/01/1979

Jane,Doe,,[email protected], "Jan 1, 1969"

John ,Smith , , John Smith <[email protected]>;, "1959 01 01"

,Mary Smith,F,[email protected],

,,M,unknown email,

"""

prompt = f"""

Please convert the following data sample to the format suitable for the schema,

correct the format and fill the gaps with the correct values, where possible,mark invalid rows with the "invalid" flag.:

{dirty_data}

The schema is as follows:

first_name: string, first letter capitalized, required

last_name: string, first letter capitalized, required

email: string, plain email format ([email protected]), required

gender: string, M or F, required

date_of_birth: string, YYYY-MM-DD, optional

email,first_name,last_name,gender,date_of_birth,invalid

"""

api_key = os.environ["OPEN_AI_API_KEY"]

response = requests.post(

'https://api.openai.com/v1/completions',

json={

"model": "text-davinci-003",

"prompt": prompt,

"max_tokens": 2048,

"temperature": 0,

"top_p": 1.0,

"frequency_penalty": 0.0,

"presence_penalty": 0.0,

},

headers={

"Content-Type": "application/json",

"Authorization": f"Bearer {api_key}"

}

)

resp = response.json()

print(resp["choices"][0]["text"])

The resulting output is a well-formatted CSV file:

[email protected],Arseniy,Potapov,M,1988-01-01,

[email protected],John,Doe,M,1979-01-01,

[email protected],Jane,Doe,F,1969-01-01,

[email protected],John,Smith,M,1959-01-01,

[email protected],Mary,Smith,F,,

,,M,unknown email,invalidGPT-3 has done an impressive job of handling this data. It has correctly identified the most suitable columns for the data, corrected the formatting (such as capitalizing first letters and removing extra spaces), filled in missing gender values, marked invalid rows as such, and parsed various date formats and converted them to YYYY-MM-DD. All of this was achieved simply by providing GPT-3 with a prompt written in plain English.

AI Parses JSON Without Knowing Schema

[{"FNAME": "ALBUS", "LNAME": "DUMBLEDORE", "EMAIL": "[email protected]"},

{"FNAME": "HERMIONE", "LNAME": "GRANGER", "EMAIL": "[email protected]"},

{"FNAME": "RON", "LNAME": "WEASLEY", "EMAIL": "[email protected]"},

{"FNAME": "HARRY", "LNAME": "POTTER", "EMAIL": "[email protected]"}]was successfully converted to the following CSV:

[email protected],Albus,Dumbledore,,,

[email protected],Hermione,Granger,,,

[email protected],Ron,Weasley,,,

[email protected],Harry,Potter,,,Here, we can see that the AI was able to parse the JSON data without any hints about the schema. It correctly matched the columns and changed the format of the names.

However, it was unable to guess the genders, which may require some improvement to the prompt.

Schema Inference with GPT-3

The schema is a critical element in the data standardization process, as it defines the structure of the data and serves as a guideline of validating it. However, what if the content type & schema are unknown in advance, or if the schema is dynamic and changes over time?

In such cases, we can use GPT-3 to infer the schema from the data itself.

The following is an example of how to provide GPT-3 with a prompt that includes input data and a schema template with placeholders for the AI to fill:

Ticker,Date,Open,High,Low,Close,Adj Close,Volume

GOOG,2008-12-31,151.117126,154.495163,150.327271,152.83097800000004,152.83097800000004,5811000

GOOG,2009-01-02,153.30291699999995,159.870193,151.762924,159.621811,159.621811,7267900

GOOG,2009-01-05,159.462845,164.549759,156.482239,162.965073,162.965073,9841400

GOOG,2009-01-06,165.41413899999995,169.298874,162.140427,165.95065300000005,165.95065300000005,12933900

GOOG,2009-01-07,163.099197,164.38583400000005,158.345123,159.964584,159.964584,9047400

GOOG,2009-01-08,158.111633,161.54431200000005,157.644669,161.54431200000005,161.54431200000005,7248100

GOOG,2009-01-09,162.691849,162.691849,155.687408,156.517014,156.517014,8737300

{

"mimetype": "?",

"main_topic": "?",

"columns": [

{"name": "?", "type": "?"},

...

] // type: date | int | float | bool | null | str

}GPT-3 was able to generate the following output schema:

{

"mimetype": "text/csv",

"main_topic": "stock market",

"columns": [

{"name": "Ticker", "type": "str"},

{"name": "Date", "type": "date"},

{"name": "Open", "type": "float"},

{"name": "High", "type": "float"},

{"name": "Low", "type": "float"},

{"name": "Close", "type": "float"},

{"name": "Adj Close", "type": "float"},

{"name": "Volume", "type": "int"}

]

}By simply providing GPT-3 with an example of the input data and a template for the desired output schema, the AI was able to accurately infer the structure of the data and detect that it relates to the stock market.

This demonstrates the impressive capabilities of GPT-3 and its potential for automating tasks that would otherwise require manual effort.

🤔 How does it work?

GPT-3 is a language model that has been trained on a large amount of text data. It can generate text based on a given input, called a prompt. The prompt should be clear and unambiguous in order to get the best results from GPT-3.

In practice, this means that by providing GPT-3 with input data and instructions, it can generate text attempting to match the prompt as good as possible. It's important to understand that AI does not "think", but rather it "knows" what a plausible answer should look like based on its training data.

Surprisingly, slight changes to the prompt can result in different output, so it's important to be specific in the instructions provided to GPT-3. Additionally, the length of the prompt can affect the cost of the API call, so it's beneficial to keep it as concise as possible.

Overall, GPT-3 is an impressive technology that can be a valuable tool for solving tasks that require a high level of intelligence and automation.

Conclusion

In this article, we explored the problem of dirty data and its impact on businesses. We discussed the importance of establishing data formatting standards and the challenges of cleaning and standardizing data. We also highlighted the potential of AI, specifically GPT-3, to automate and improve the process of cleaning and standardizing data.

By using GPT-3, businesses can save time, reduce the risk of errors, and improve the quality and reliability of their data. However, it's important to keep in mind that AI is not a replacement for human intelligence, but rather a tool to assist in tasks that are repetitive or otherwise not well-suited to humans.

By using AI wisely, businesses can improve their efficiency and productivity while also leveraging the unique strengths of their human employees.